Introduction

Real-time network data processing applications are often concerned with peak latencies and jitter. Part of that jitter could come from the variation in congestion as packets propagate across a network, but another important component of that jitter comes from the host itself, especially in the limit of zero congestion. Many articles (for example, here) point to intermittent OS processes such as interrupt handling and page reclaims as a source. While FPGAs offer the best performance in terms of host jitter, developing FPGA programs is hampered by long compile times and difficulty in debugging. Because of this, developers often opt to continue creating applications designed to run on conventional CPUs or GPUs. Previous articles in this series have focused on how to achieve high throughputs (when using either a GPU or a CPU to manage the network card), but here we discuss technologies to minimize host jitter in the context of the second approach, where the CPU manages the NIC. Fixstars Solutions updates this series regularly as it continues to develop lightning-kit, its high-throughput, low-latency TCP data transfer library.

Background

For real-time application developers, it is important to characterize acceptable jitter performance. In particular, a threshold for acceptable latency should be defined. Eliminating unacceptable-latency events entirely is generally challenging, so an acceptable frequency of occurrence for such events should also be set.

Various real-time-focused devices exist. FPGAs offer the lowest peak latency, but programming them is complex. It is also possible to use real-time processors for edge computing (ARM, Intel).

These are both good options, but our target in this case was a general-purpose CPU. Our clients use such CPUs together with 100GbE NICs and have limited opportunities to completely refit their systems. Driving such a NIC from a CPU while achieving both high throughput and low latency is difficult, so we set out to reduce the jitter on these systems while maintaining throughput.

Methods

The jitter observed on the CPU is caused by shared hardware resources (CPU cores, CPU cache, memory) used by other processes, device accesses, interrupts from external devices, and even fluctuations in system temperature. Here we describe methods to mitigate the impact of each of these factors.

Processes

One of the primary causes of jitter is interference from other processes. The most effective remedy is to terminate unnecessary processes and disable redundant services. For instance, stopping processes like kubelet, rshim, containerd, and dockerd significantly reduces jitter. However, certain processes are essential for kernel tasks and must remain active. Some of these kernel processes can be moved away from the CPU cores where low-latency programs are running. This requires a Linux kernel that supports CPU core isolation via the isolcpus kernel parameter.
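As a minimal sketch of these two steps, the script below stops the services named above and then checks whether the isolcpus setting took effect by comparing the intended CPU list with `/sys/devices/system/cpu/isolated`. The systemd unit names (notably `docker` as the unit that runs dockerd), the `DRY_RUN` switch, and the function names are assumptions for illustration; adjust them to your host.

```bash
#!/usr/bin/env bash
# Sketch only: stop services that compete for CPU time and check that the
# isolcpus= setting took effect. Unit names are assumptions; adjust to your host.

SERVICES=(kubelet rshim containerd docker)   # "docker" is assumed to run dockerd

# Print (DRY_RUN=1, the default) or execute the systemctl commands.
stop_services() {
  local svc
  for svc in "${SERVICES[@]}"; do
    if [[ "${DRY_RUN:-1}" == 1 ]]; then
      echo "sudo systemctl stop ${svc}"
    else
      sudo systemctl stop "${svc}" || echo "warning: could not stop ${svc}" >&2
    fi
  done
}

# Expand a kernel CPU list such as "16-19,48" into "16 17 18 19 48".
expand_cpu_list() {
  local -a parts=() out=()
  local part lo hi i
  IFS=, read -ra parts <<< "$1"
  for part in "${parts[@]}"; do
    if [[ $part == *-* ]]; then
      lo=${part%-*}; hi=${part#*-}
      for ((i = lo; i <= hi; i++)); do out+=("$i"); done
    else
      out+=("$part")
    fi
  done
  echo "${out[*]}"
}

# Succeed only if the kernel reports exactly the CPUs we intended to isolate.
check_isolcpus() {
  local wanted=$1 reported
  reported=$(cat /sys/devices/system/cpu/isolated 2>/dev/null)
  [[ "$(expand_cpu_list "$wanted")" == "$(expand_cpu_list "$reported")" ]]
}
```

For example, `DRY_RUN=0 stop_services` actually stops the units (sudo rights required), and after rebooting with `isolcpus=16-31,48-63`, running `check_isolcpus 16-31,48-63 && echo isolated` confirms the setting.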

An RT Linux kernel can also decrease the frequency of unacceptable jitter events. In our system, the generic Linux kernel exhibited numerous latencies exceeding 400 us, whereas the RT kernel kept the majority of latencies under 200 us (see detailed results below).
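Before measuring, it is worth confirming that the RT kernel is actually the one running. The heuristic below is a sketch that assumes many PREEMPT_RT builds expose `/sys/kernel/realtime` containing `1` and/or include `PREEMPT_RT` (or `PREEMPT RT` on older builds) in `uname -v`; the exact strings vary by distribution, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Heuristic check for a PREEMPT_RT kernel. With no argument it inspects the
# running kernel; with an argument it only tests that version string.
is_rt_kernel() {
  if [[ $# -eq 0 && -r /sys/kernel/realtime ]]; then
    [[ $(cat /sys/kernel/realtime) == 1 ]] && return 0
  fi
  local version=${1:-$(uname -v)}
  [[ $version == *PREEMPT_RT* || $version == *"PREEMPT RT"* ]]
}
```

Typical use is `is_rt_kernel && echo "RT kernel" || echo "generic kernel"`.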

If unnecessary processes remain on the target CPU core, it can help to take the CPU offline temporarily, which prompts the OS to migrate those processes to other cores. This can be done with the following trick:

```bash
echo 0 | sudo tee /sys/devices/system/cpu/cpu${TARGET_ID}/online  # take the core offline
sleep 5                                                           # wait several seconds
echo 1 | sudo tee /sys/devices/system/cpu/cpu${TARGET_ID}/online  # bring it back online
```

This helpful article also discusses CPU isolation in some detail.
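After the core comes back online, you can check what is still scheduled there. The sketch below lists tasks whose last-used processor (the `psr` column of `ps`) is the target core; the helper names are hypothetical. Per-CPU kernel threads such as `ksoftirqd/N` or `migration/N` are bound to their core, so expect them to remain.

```bash
#!/usr/bin/env bash
# Keep only "PID PSR COMMAND" lines whose PSR matches the given CPU.
# Reads stdin, so it also works on saved ps output.
filter_by_psr() {
  awk -v cpu="$1" '$2 == cpu { print }'
}

# List tasks that last ran on a given CPU (pid=,psr=,comm= suppresses headers).
tasks_on_cpu() {
  ps -eo pid=,psr=,comm= | filter_by_psr "$1"
}
```

For example, `tasks_on_cpu "${TARGET_ID}"` run right after the offline/online cycle shows which user processes, if any, were not migrated away.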

Interrupts

Another significant source of jitter is device-initiated interrupts. These interrupts can be handled by cores that are not running your application, which keeps nondeterministic load off the application's core. The corresponding kernel parameters are irqaffinity, which sets the cores that handle device interrupts by default, and nohz_full and rcu_nocbs, which reduce scheduler-tick interrupts and offload RCU callback processing from the listed cores. The number of interrupts handled by each CPU core can be checked with the following commands:

```bash
cat /proc/interrupts
cat /proc/softirqs
```

The Linux kernel also uses workqueues, in which kernel worker threads execute deferred tasks on a given core; these can also contribute to jitter. The perf tool can be used to record the processes that run concurrently with your application, as follows:

```bash
perf record -e "sched:sched_stat_runtime" -C <cpu core id> <your application>
perf record -e "sched:sched_switch" -C <cpu core id> <your application>
perf record -e "workqueue:*" -C <cpu core id> <your application>
```

It is possible to set a workqueue affinity mask, and in our system doing so had a clear effect on jitter (see the results below). For example, the command below would keep workqueues off cores 16-19 and 48-51.

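```bash
# Cpumask words are 32 bits, most significant first: fff0ffff clears bits 16-19,
# so this mask excludes CPUs 16-19 and 48-51 on a 64-CPU system.
sudo find /sys/devices/virtual/workqueue -name cpumask -exec sh -c 'echo fff0ffff,fff0ffff > "$1"' _ {} ';'
```

Temperature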

Our experiments demonstrate that the temperature of the NIC and CPU affects the occurrence of high latency events, probably due to thermal throttling. We observed frequent jitter at temperatures above 70°C, while temperatures below 40°C resulted in minimal jitter.
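One way to keep an eye on these temperature ranges is to poll the kernel's thermal zones, which report millidegrees Celsius in `/sys/class/thermal/thermal_zone*/temp`. This is a sketch under several assumptions: which zones exist depends on the platform, the NIC temperature is usually not among them (vendor tools are needed for that), and the 40 °C / 70 °C cut-offs are simply the values we observed, not universal limits. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# Classify a temperature in millidegrees Celsius using the thresholds observed
# in our tests: above 70 C frequent jitter, below 40 C minimal jitter.
classify_temp() {
  local milli=$1
  if (( milli > 70000 )); then
    echo high
  elif (( milli < 40000 )); then
    echo low
  else
    echo medium
  fi
}

# Print each readable thermal zone with its type, temperature, and class.
report_thermal_zones() {
  local zone t
  for zone in /sys/class/thermal/thermal_zone*; do
    [[ -r $zone/temp ]] || continue
    t=$(<"$zone/temp")
    [[ -n $t ]] || continue
    printf '%s %s %d.%03d C %s\n' "${zone##*/}" "$(<"$zone/type")" \
      $((t / 1000)) $((t % 1000)) "$(classify_temp "$t")"
  done
}
```

Running `report_thermal_zones` periodically during a test gives a rough record to correlate with high-latency events.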

Memory Interleaving

Some systems allow the memory interleaving method to be adjusted. Interleaving spreads memory accesses across modules to parallelize them and achieve higher throughput, but it also increases resource sharing among processes, which can worsen jitter. To reduce jitter, it is preferable to keep resources separate. In our system, we could configure the number of memory modules grouped together for interleaving.

Other Possible Factors

In Linux, it is possible to control a CPU's operating state. On many systems the default configuration favors energy savings over high performance, and some jitter may arise from fluctuations in CPU frequency as the CPU attempts to reduce power consumption. Setting the CPU to operate at its highest-performance state can minimize these frequency fluctuations and make performance more consistent.

In our system, turbo boost was enabled and we observed a stable CPU frequency during application execution, so this setting did not affect jitter in our case. To put a CPU into performance mode, use the following command:

```bash
sudo find /sys/devices/system/cpu -name scaling_governor -exec sh -c 'echo performance > "$1"' _ {} ';'
```

In addition, some forum discussions mention the influence of the IOMMU on jitter, but in our environment the impact was marginal. Lastly, Mellanox provides its own tuning tool, mlnx_tune. In our experiments the results of using this tool were mixed, but it is worth exploring because it is easy to use.
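To check whether frequency fluctuations matter on a particular host, one option is to sample the target core's current frequency while the application runs, as in the sketch below. It assumes the cpufreq sysfs interface exposes `scaling_cur_freq` (in kHz) for the core; the function names, sample count, and 0.1 s interval are hypothetical choices.

```bash
#!/usr/bin/env bash
# Summarize frequency samples (kHz, one per line on stdin) as "min max spread" in MHz.
freq_summary() {
  awk '{ v = $1 + 0
         if (NR == 1 || v < min) min = v
         if (NR == 1 || v > max) max = v }
       END { if (NR) printf "%d %d %d\n", min / 1000, max / 1000, (max - min) / 1000 }'
}

# Sample one core's current frequency n times, every 0.1 s.
sample_freq() {
  local cpu=$1 n=${2:-100} i
  for ((i = 0; i < n; i++)); do
    cat "/sys/devices/system/cpu/cpu${cpu}/cpufreq/scaling_cur_freq"
    sleep 0.1
  done
}
```

For example, `sample_freq 16 600 | freq_summary` samples core 16 for roughly a minute; a small spread, like the stable frequency we saw with turbo boost enabled, suggests the governor setting is unlikely to matter.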

Experimental Results

System Topology

Same as in the previous article.

Testing Environment

- Ubuntu 20.04.6, Linux 5.4.0-182-generic

- MLNX_OFED_LINUX-23.10-2.1.3.1-ubuntu20.04-x86_64.iso

- dpdk-stable-22.11.2

Methodology

To characterize the jitter in the server, a client sent packets to the server. The server recorded a timestamp just before sending each ACK packet, and the time interval between consecutive ACKs was recorded. The client transmitted packets continuously so that gaps between ACKs would not be caused by a lack of incoming packets.
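The interval computation itself is simple. As a sketch, assume a hypothetical log with one integer timestamp in nanoseconds per line, taken just before each ACK is sent, from a monotonic clock (this is not lightning-kit's actual output format). The awk filters below turn consecutive timestamps into ACK-ACK intervals in microseconds and summarize them against a threshold; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Assumed input: integer ns timestamps, one per line, from a monotonic clock.
# Values must stay below 2^53 (about 104 days of ns) to be exact in awk doubles.

# Print the interval between consecutive timestamps in microseconds.
ack_intervals() {
  awk 'NR > 1 { printf "%.3f\n", ($1 - prev) / 1000 } { prev = $1 }'
}

# Print the sample count, the maximum interval, and how many exceed a threshold (us).
interval_stats() {
  local threshold_us=${1:-200}
  awk -v th="$threshold_us" '
    { v = $1 + 0; n++; if (v > max) max = v; if (v > th) over++ }
    END { printf "n=%d max=%.3f over_%s=%d\n", n, max, th, over }'
}
```

For example, `ack_intervals < ack_times.txt | interval_stats 200` (file name hypothetical) reports how many intervals in a run exceeded 200 us.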

The specific cause of each jitter event can vary widely depending on CPU conditions and active processes, so it is difficult to locate the source of each event. Therefore, we compared results between environments with and without jitter tuning, where the tuning methods outlined above were applied. In particular, the methods listed below were used. To average out the effects that short-lived CPU states could have on the analysis, each test was run for 1 hour.

- Fan speed was set to maximum in order to achieve the coolest temperature. NIC temperature was observed to be under 50 ℃ during the test.

- A bare Ubuntu server with no bloatware installed was used, along with DPDK.

- The application CPU affinity was set such that the application runs on the same socket as the NIC.

- A real-time Linux kernel was used.

- Unnecessary processes were killed and unnecessary services were stopped. In particular, the kubelet, rshim, containerd, and dockerd services were stopped.

- The CPU running the application (core 16 in our case) was isolated by passing the kernel parameter `isolcpus=16-31,48-63`. The other CPUs on the same socket as core 16 were isolated as well.

- Interrupts were isolated by passing the kernel parameters `rcu_nocbs=16-31,48-63`, `nohz_full=16-31,48-63`, and `irqaffinity=0-15`.

- The relevant cores (16-31, 48-63) were relieved of workqueue duty by running:

  ```bash
  sudo find /sys/devices/virtual/workqueue -name cpumask -exec sh -c 'echo 0000ffff,0000ffff > "$1"' _ {} ';'
  ```

- The default memory interleaving method was used.

- Before starting the application, the target CPU core was taken offline for a few seconds and then brought back online by running:

  ```bash
  echo 0 | sudo tee /sys/devices/system/cpu/cpu16/online
  sleep 5
  echo 1 | sudo tee /sys/devices/system/cpu/cpu16/online
  ```
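Because the same CPU list appears in several of these settings, it can help to derive them from one place. The sketch below (function names hypothetical) prints the kernel command-line parameters used in our setup and computes the workqueue cpumask that excludes the isolated CPUs. A cpumask is written as comma-separated 32-bit hex words, most significant word first, which is why excluding 16-31 and 48-63 on a 64-CPU host gives `0000ffff,0000ffff`.

```bash
#!/usr/bin/env bash
# Sketch: derive the isolation settings from a single CPU list.

# Expand a kernel CPU list such as "16-19,48" into "16 17 18 19 48".
expand_cpu_list() {
  local -a parts=() out=()
  local part lo hi i
  IFS=, read -ra parts <<< "$1"
  for part in "${parts[@]}"; do
    if [[ $part == *-* ]]; then
      lo=${part%-*}; hi=${part#*-}
      for ((i = lo; i <= hi; i++)); do out+=("$i"); done
    else
      out+=("$part")
    fi
  done
  echo "${out[*]}"
}

# Kernel parameters: isolated CPUs plus the housekeeping cores that take IRQs.
kernel_params() {
  local isolated=$1 housekeeping=$2
  echo "isolcpus=${isolated} rcu_nocbs=${isolated} nohz_full=${isolated} irqaffinity=${housekeeping}"
}

# Cpumask allowing CPUs 0..ncpus-1 except the listed ones, printed as
# comma-separated 32-bit hex words, most significant word first.
cpumask_excluding() {
  local ncpus=$1 excluded=$2 cpu w
  local nwords=$(( (ncpus + 31) / 32 ))
  local -a words=() out=()
  for ((w = 0; w < nwords; w++)); do words[w]=0; done
  for ((cpu = 0; cpu < ncpus; cpu++)); do          # start with every CPU allowed
    w=$(( cpu / 32 ))
    words[w]=$(( words[w] | (1 << (cpu % 32)) ))
  done
  for cpu in $(expand_cpu_list "$excluded"); do    # clear the excluded CPUs
    w=$(( cpu / 32 ))
    words[w]=$(( words[w] & ~(1 << (cpu % 32)) ))
  done
  for ((w = nwords - 1; w >= 0; w--)); do
    out+=("$(printf '%08x' "${words[w]}")")
  done
  (IFS=,; echo "${out[*]}")
}
```

On Ubuntu, the printed parameters would typically be appended to `GRUB_CMDLINE_LINUX_DEFAULT` in `/etc/default/grub`, followed by `sudo update-grub` and a reboot; the mask would then be written with the `find` command listed above.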

Results

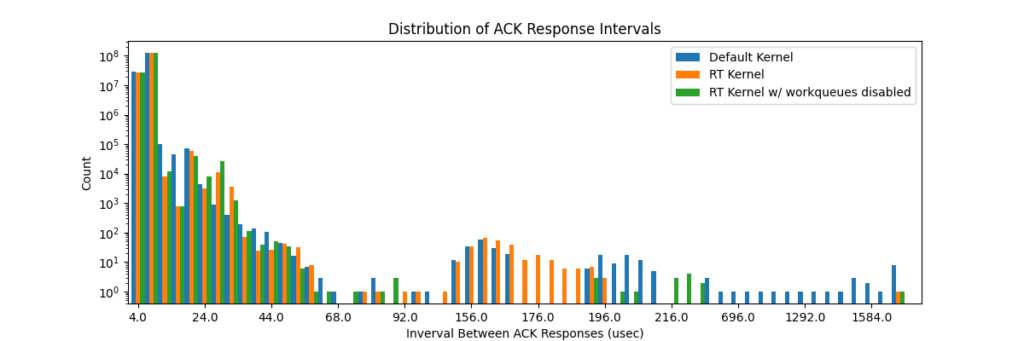

The first graph is a histogram showing how often each interval between ACK responses occurred for the default Linux kernel, the RT kernel, and the RT kernel with workqueues disabled. Note that the frequencies are displayed on a logarithmic scale and that the horizontal axis is nonlinear. Qualitatively, the RT kernel has very few events with response times over 200 us compared to the default kernel. Furthermore, the RT kernel with workqueues disabled (green) has very few events with response times over 100 us compared to the unaltered RT kernel. The throughput was 92 Gbps in all three cases.
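A histogram with nonlinear bins like this one can be produced from a list of intervals with a few lines of awk. The sketch below takes ascending upper bin edges in microseconds; the edges in the example are placeholders for illustration, not the ones used for the figure, and the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Bucket intervals (us, one per line on stdin) into bins with the given
# ascending upper edges; the final bin collects everything above the top edge.
histogram() {
  awk -v edges="$1" '
    BEGIN { n = split(edges, e, ","); for (i = 1; i <= n; i++) e[i] += 0 }
    {
      v = $1 + 0
      for (i = 1; i <= n; i++) if (v <= e[i]) break
      count[i]++
    }
    END {
      for (i = 1; i <= n; i++) printf "<=%s %d\n", e[i], count[i]
      printf ">%s %d\n", e[n], count[n + 1]
    }'
}
```

For example, `histogram 10,20,50,100,200,400 < intervals.txt` (file name hypothetical) yields counts that can be plotted on a log scale.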

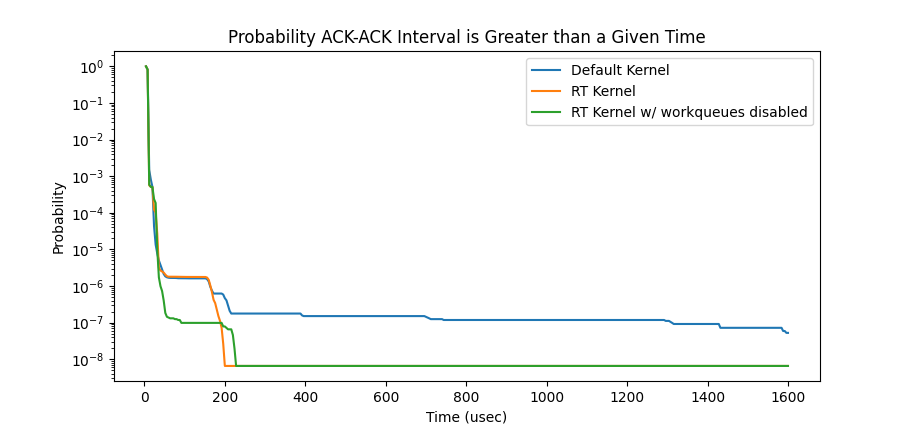

Perhaps more useful is the complementary cumulative distribution of events, which characterizes the probability of rare, high-latency responses. The second graph shows the probability that an ACK-ACK interval is greater than a given value on the horizontal axis. The probability that the interval is greater than 0 is 1, and it decreases from there. Again, the graph shows that the default kernel has a much higher probability of high-latency events, and that the RT kernel with workqueues disabled performs best for response times below 100 us.
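The exceedance probability P(interval > x) can be computed directly from the measured intervals. In the sketch below (function name hypothetical), for each requested x in microseconds, the function reports the fraction of samples strictly greater than x, which is exactly the quantity on the vertical axis of such a graph.

```bash
#!/usr/bin/env bash
# Empirical P(interval > x) for each x in a comma-separated list (us).
# Intervals are read from stdin, one per line.
exceedance() {
  awk -v xs="$1" '
    BEGIN { m = split(xs, x, ","); for (j = 1; j <= m; j++) x[j] += 0 }
    { v = $1 + 0; n++; for (j = 1; j <= m; j++) if (v > x[j]) over[j]++ }
    END { for (j = 1; j <= m; j++) printf "%s %.6g\n", x[j], (n ? over[j] / n : 0) }'
}
```

Because a one-hour run produces a very large number of samples, the same output also shows how rare events above an acceptable-latency threshold are, which is the frequency criterion discussed in the Background section.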

Conclusion

This article has demonstrated Fixstars Solutions’ techniques to remove jitter events causing unacceptable ACK-ACK intervals. Our methods, which involved CPU isolation, interrupt isolation, and work queue disabling, significantly reduce the probability of ACK delays greater than 250 us compared to the default Linux kernel. While this performance meets our current customer’s needs, we continue to investigate ways to further minimize jitter. Interested readers should check back often for more articles in our High-Performance Networking series, where Fixstars documents innovations made as it develops lightning-kit, an open-source solution for your networking needs!