* This blog post is an English translation of an article originally published in Japanese on March 12, 2020.

* The content of this article is the result of an approximately one-month internship in February 2020.

Do you know about Unikernels? I do. A Unikernel is a machine image created by linking an application with only the bare minimum necessary functions of a library OS, designed to run in a single address space directly on hardware or a hypervisor.

In this article, we explore the potential of Unikernels as a means to achieve desired functionality with a minimal machine image size on embedded systems, such as microcontrollers for automotive applications. We will provide an overview and investigate Unikernel projects existing as of February 2020.

What is a Unikernel?

The term “Unikernel” was proposed in the 2013 paper “Unikernels: Library Operating Systems for the Cloud” [1], alongside MirageOS, a Unikernel project implemented in OCaml.

The background for this proposal was the observation that while users in cloud services commonly run single-purpose applications in VMs, these VMs typically use general-purpose OSes, leading to significant waste in terms of image size and the range of functions used.

Thus, [1] defines the purpose of a Unikernel as generating a machine image that achieves the desired functionality with the minimal configuration as a single VM on a hypervisor, which serves as the foundation for cloud services.

As stated at the beginning of this article, a Unikernel is a machine image that runs only a single-function application in a single address space. It is created by linking only the necessary object files from a provided library OS with the application’s object files.
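To make "linking only the necessary object files" concrete, here is a minimal sketch of how a linker-style resolver might pick objects out of a library OS. It is a toy model, not the MirageOS or Unikraft build logic: the object names, the symbol names, and the `select_objects` helper are all hypothetical and exist only for illustration.

```python
from collections import deque


def select_objects(app_undefined, objects):
    """Return the set of library objects reachable from the app's symbols.

    objects maps an object file name to (defined_symbols, undefined_symbols).
    """
    # Index which object defines each symbol.
    providers = {}
    for name, (defined, _undefined) in objects.items():
        for sym in defined:
            providers.setdefault(sym, name)

    needed = set()
    pending = deque(sorted(app_undefined))
    while pending:
        sym = pending.popleft()
        obj = providers.get(sym)
        if obj is None:
            raise KeyError(f"unresolved symbol: {sym}")
        if obj in needed:
            continue
        needed.add(obj)
        # The object we just pulled in may need further symbols.
        pending.extend(sorted(objects[obj][1]))
    return needed


# Hypothetical library OS made of four objects.
LIBOS = {
    "console.o": ({"console_write"}, set()),
    "stdio.o": ({"printf"}, {"console_write"}),
    "net.o": ({"socket", "send"}, {"alloc"}),
    "alloc.o": ({"alloc"}, set()),
}

# An app that only calls printf never pulls in the network stack.
print(sorted(select_objects({"printf"}, LIBOS)))  # ['console.o', 'stdio.o']
```

In this model, an application that only prints text ends up with two of the four objects, which is the intuition behind the small image sizes discussed later.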

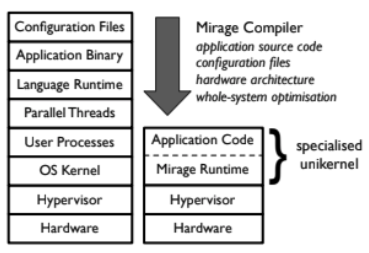

MirageOS, implemented in [1], is designed to run on hypervisors like Xen and KVM. As shown in the diagram below, it has a very simple configuration where the Unikernel, as a single binary including the application, runs directly on the hypervisor.

A Unikernel is simply a single binary linking a library OS and an application, where the library OS functions and the application run in the same privilege mode. This means that while applications running on a general-purpose OS issue system calls to use OS functions, in a Unikernel, OS functions are accessed by simple function calls.

There is also only one address space, so a Unikernel operates in the same way as an application running on bare metal. From this perspective, a Unikernel can be seen as a bare-metal machine image that can be built with little more than the effort of writing application code, because a hardware abstraction layer and libraries providing the major OS functions are already available. The one difference is that the image must follow the memory map defined by the Unikernel.

Since only the functions necessary for the application are linked, the image size is naturally smaller compared to a VM with a general-purpose OS. Furthermore, there is no overhead from system calls or multiprocessing, and optimizations can be applied across both application code and library OS code, promising performance improvements for each application. Boot times are also short, making it highly suitable for scenarios where VMs need to be launched frequently. Another advantage is a smaller memory footprint. Additionally, by stripping unnecessary OS functions, the attack surface is significantly reduced, and since it differs for each built application, improved security can also be expected.

Potential of Unikernels in Automotive Systems

As mentioned at the beginning, the purpose of this Unikernel investigation is to explore the future possibilities of OS layer architecture in automotive systems. This chapter will discuss the demands arising in automotive systems and the potential of Unikernels in that context.

Automobile control was once performed by mechanical mechanisms, but current automobiles use electronic control, with microcontrollers called ECUs (Electronic Control Units) playing a central role. Each ECU is given a specific role, and modern cars can have over 100 ECUs. ECUs communicate with each other via protocols like CAN (Controller Area Network) to achieve the overall functionality of the vehicle.

However, the increase in ECUs due to performance enhancements causes problems in terms of cost, space, and complexity, leading to a demand for ECU integration [2]. Virtualization by a hypervisor is used to consolidate functions previously held by separate ECUs onto a single ECU. This requires an OS with standard high-level APIs, running everything from best-effort systems like infotainment to control systems requiring strict real-time performance, each as a separate VM.

The OS running on control system VMs is converging towards AUTOSAR, a European automotive OS standard. Therefore, it’s unlikely that the functional development part of control systems will be replaced by a new platform. However, for VMs running applications that require high-level APIs of a general-purpose OS like Linux, such as infotainment, Unikernels could potentially be introduced [3].

As mentioned earlier, Unikernel images are small, and their memory footprint is also small, making them a good match for resource-constrained embedded systems like automotive systems. Also, as previously stated, by including only the bare minimum functions in the machine image, the attack surface can be reduced, leading to improved security.

Trying to Run MirageOS (It Didn’t Work)

Since I had a Xen environment running on an aarch64 microcontroller, I decided to try running MirageOS in a domU domain. (I chose MirageOS because it was the first reference implementation for the Unikernel concept and is still actively developed). I set up a cross-compilation environment for aarch64 (using QEMU user emulation, etc.), installed MirageOS, and built a “hello world” app for Xen.

$ sudo apt-get update

$ sudo apt-get install opam

$ opam init

$ opam switch create <version>

$ eval `opam config env`

$ opam install mirage

$ git clone git://github.com/mirage/mirage-skeleton.git

$ cd mirage-skeleton/tutorial/hello

$ mirage configure -t xen

$ make depend

$ make

However, the build of dependent packages via make depend failed. Looking at the error message:

start_info;

^

In file included from blkfront.c:7:0:

/home/ubuntu/.opam/4.06.0/.opam-switch/build/minios-xen.0.9/arch/arm/include/os.h:155:2: error: #error "Unsupported architecture"

#error "Unsupported architecture"

^

minios.mk:78: recipe for target '/home/ubuntu/.opam/4.06.0/.opam-switch/build/minios-xen.0.9/blkfront.o' failed

make: *** [/home/ubuntu/.opam/4.06.0/.opam-switch/build/minios-xen.0.9/blkfront.o] Error 1

The MiniOS build failed, leaving the ominous message “Unsupported architecture.”

So, I asked about aarch64/Xen support on the MirageOS issue tracker and received a reply that MiniOS, which Xen-targeted MirageOS depends on, does not currently support aarch64/Xen…

Since I really wanted to conduct an operational experiment on my aarch64/Xen environment, I searched for records of porting efforts or information to perform one.

The Issue of MiniOS Not Supporting aarch64/Xen

After gathering information, I found a repository and an announcement for a port that ran MiniOS in an aarch64/Xen environment. I tried building according to the mailing list announcement.

$ git clone https://github.com/baozich/mini-os.git

$ git submodule add -f https://git.kernel.org/pub/scm/utils/dtc/dtc.git dtc

$ cd dtc

$ git checkout -b tag v1.4.5

$ cd ..

$ CONFIG_TEST=y CONFIG_START_NETWORK=n CONFIG_BLKFRONT=n CONFIG_NETFRONT=n \
CONFIG_FBFRONT=n CONFIG_KBDFRONT=n CONFIG_CONSFRONT=n CONFIG_XC=n \
MINIOS_TARGET_ARCH=arm64 CROSS_COMPILE=aarch64-elf- make

However, the build failed. Why…

In file included from /usr/include/newlib/sys/reent.h:15:0,

from /usr/include/newlib/stdlib.h:18,

from dtc/libfdt/libfdt_env.h:57,

from dtc/libfdt/fdt.c:51:

/usr/include/newlib/sys/_types.h:83:9: error: unknown type name '_LOCK_RECURSIVE_T'

typedef _LOCK_RECURSIVE_T _flock_t;

^

minios.mk:69: recipe for target '/home/takahiro_ishikawa/work/mini-os/dtc/libfdt/fdt.o' failed

make: *** [/home/takahiro_ishikawa/work/mini-os/dtc/libfdt/fdt.o] Error 1

While searching for possible causes, I found a reply regarding MiniOS ARM support on the Xen user mailing list (xen-users@lists.xensource.com). According to that reply:

Support for Arm in Mini-OS is in particularly bad step and I don’t expect any update there are as we are focusing to Unikraft.

It seems the Xen Project is no longer focusing on MiniOS and is instead concentrating on a Unikernel called Unikraft. This was unfortunate. At this point, I gave up on the MirageOS operational experiment and decided to switch focus to investigating and experimenting with Unikraft.

What is Unikraft?

Unikraft is a set of libraries for building Unikernels, which began as an experimental project at NEC Labs in early 2017 and was adopted as a Xen Incubator Project in October 2017.

Unikraft identifies the difficulty of porting existing applications to build as Unikernels as the main reason for the lack of widespread adoption of existing Unikernels. It was launched with the goal of enabling existing applications to be built as Unikernels with almost no porting effort.

Unikraft’s ideal state is described as:

- All libraries that an application might use are available.

- At build time, only the necessary libraries are linked from this library set.

- The minimal execution image for the application can be automatically built for the target platform.

A secondary goal is to enable users to contribute by providing libraries to a common codebase, rather than each spending time on porting work for their own projects.

There is also an objective to consolidate the efforts currently dispersed among various (especially MiniOS-based) Unikernel projects in the Xen community into the Unikraft project.

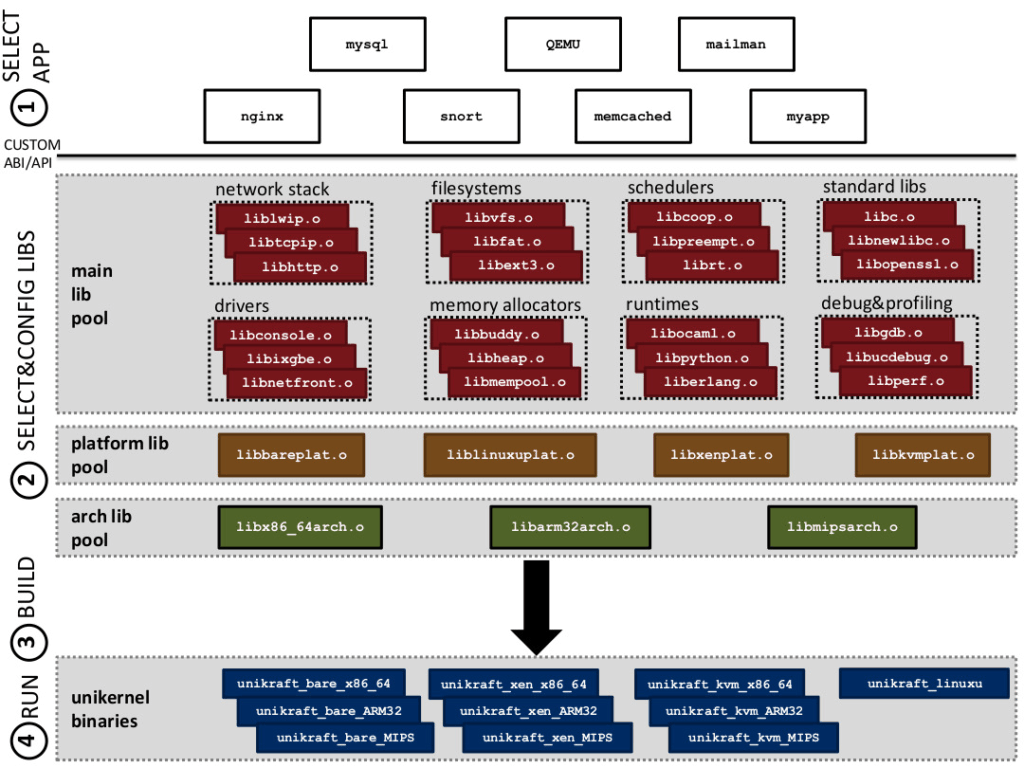

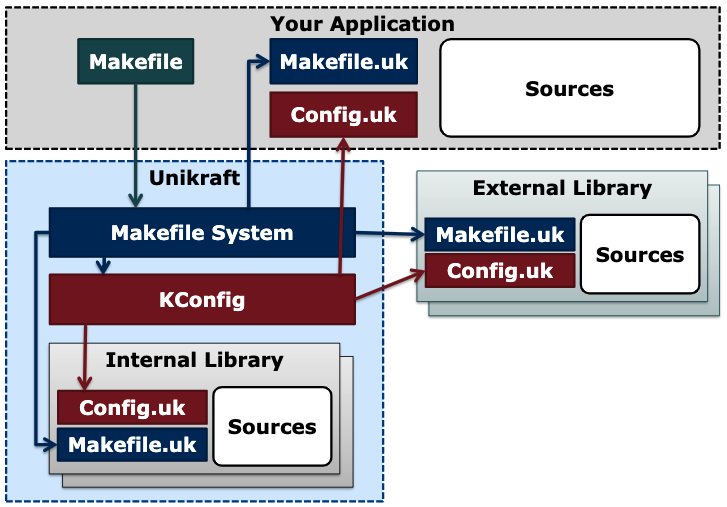

Below is a conceptual diagram showing the libraries provided by Unikraft and the process by which a Unikernel build image is generated.

The Unikraft project provides the main Unikraft repository and repositories for numerous official libraries. This time, to see how Unikernel builds are performed with Unikraft, I will follow the tutorial and try building the httpreply app, which is provided as one of the official sample apps.

Locally, clone the respective repositories from the Unikraft Project repos to create the following directory structure:

├── apps

│ └── httpreply

├── libs

│ └── lwip

└── unikraft

// Clone the main Unikraft repo

$ git clone git://xenbits.xen.org/unikraft/unikraft.git

// Clone external library repo (lwip)

$ git clone git://xenbits.xen.org/unikraft/libs/lwip.git

// Clone sample app

$ git clone git://xenbits.xen.org/unikraft/apps/httpreply.git

Looking at the contents of the main Unikraft repository, it is largely composed of architecture-dependent code, platform-dependent code (Xen, KVM, etc.), and the main library set.

In addition to the libraries hosted in this main repository, any external libraries required by the application must be cloned into the libs directory in the directory tree shown above.

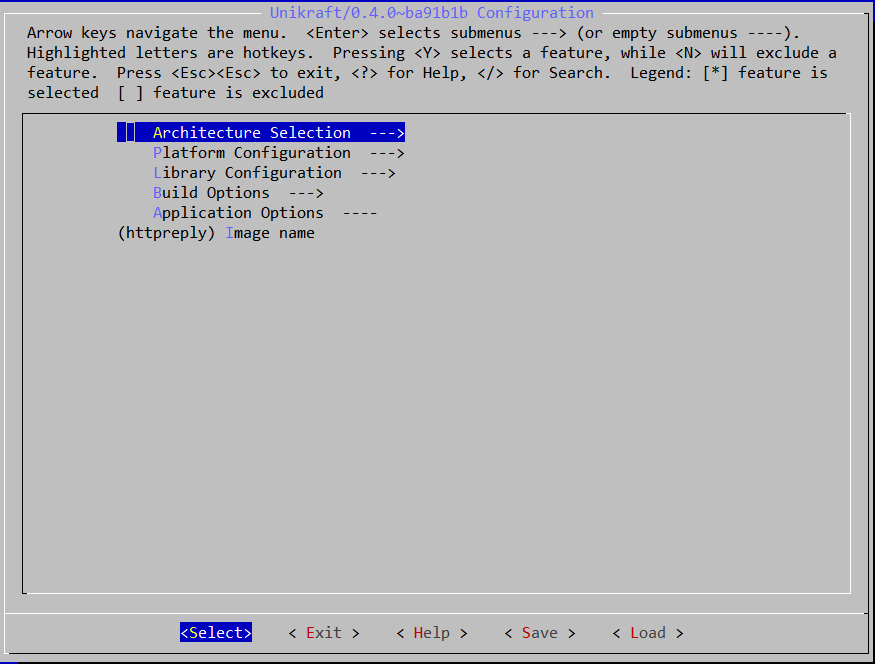

Once all necessary libraries are cloned, navigate to the application directory and run make menuconfig.

$ cd /path/to/apps/httpreply

$ make menuconfig

This will bring up a configuration screen like the one below.

Unikraft’s build system uses Linux’s Kconfig, allowing interactive configuration of the build image.

Now, as mentioned earlier, since I have an aarch64/Xen environment, I tried to specify the architecture and platform from the configuration screen.

Huh… it’s not there…

The aarch64/Xen combination is missing. aarch32/Xen and aarch64/KVM are supported, though… It seems that as of February 2020, Unikraft does not yet support the aarch64/Xen combination.

Since research time was limited, I gave up and decided to set up a virtual machine (VirtualBox) on my development machine, create a Xen environment on it, and try building and running a Unikernel with the currently supported x86_64/Xen combination.

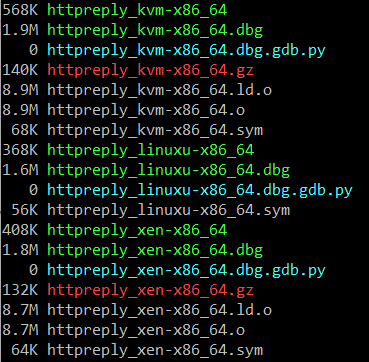

In the menuconfig I launched earlier, I specified x86_64/Xen and confirmed that lwip was specified as an external library. After configuring and exiting, a .config file was generated in the current directory. Running make in this state generated a build image for the specified architecture and platform in the build directory.

$ make

$ ls -sh build/
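The .config file written by menuconfig is plain text in the usual Kconfig output format, so the chosen architecture, platform, and libraries can be checked without reopening the menu. The following is a minimal sketch of such a check. The `parse_dotconfig` and `enabled` helpers are hypothetical, and the option names in the sample are illustrative rather than copied from a real Unikraft build.

```python
def parse_dotconfig(text):
    """Parse Kconfig .config text into a dict of option name -> value."""
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("# CONFIG_") and line.endswith(" is not set"):
            # Disabled options are recorded as comments by Kconfig.
            name = line[len("# "):-len(" is not set")]
            options[name] = False
        elif line.startswith("CONFIG_") and "=" in line:
            name, value = line.split("=", 1)
            if value == "y":
                options[name] = True
            elif value.startswith('"') and value.endswith('"'):
                options[name] = value[1:-1]
            else:
                options[name] = value
    return options


def enabled(options):
    """Return the sorted names of options that are switched on (=y)."""
    return sorted(name for name, value in options.items() if value is True)


# Illustrative excerpt; a real .config is much longer.
SAMPLE = """\
# hypothetical excerpt, option names are illustrative
CONFIG_ARCH_X86_64=y
CONFIG_PLAT_XEN=y
# CONFIG_PLAT_KVM is not set
CONFIG_LIBLWIP=y
CONFIG_UK_NAME="httpreply"
"""

print(enabled(parse_dotconfig(SAMPLE)))
# ['CONFIG_ARCH_X86_64', 'CONFIG_LIBLWIP', 'CONFIG_PLAT_XEN']
```

To inspect an actual build, the same function can be pointed at the contents of the .config file in the application directory.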

Then, I launched the Unikernel in domU.

$ xl create -c httpreply_xen-x86_64

A server that simply receives HTTP requests and returns a response started up!
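xl create expects a domain configuration file, and the one used here is not shown in this article. As a rough sketch of what a minimal one might contain, the helper below renders the text of such a file. The `name`, `kernel`, `memory`, `vcpus`, and `vif` keys are standard xl configuration keys, but the helper itself, the memory size, the bridge name, and the kernel path are assumptions chosen for illustration.

```python
def render_xl_config(name, kernel, memory_mb, vcpus, bridge=None):
    """Render a minimal xl domain configuration as text.

    All values are supplied by the caller; nothing here is taken from
    the configuration actually used in the article.
    """
    lines = [
        f'name = "{name}"',
        f'kernel = "{kernel}"',
        f"memory = {memory_mb}",
        f"vcpus = {vcpus}",
    ]
    if bridge is not None:
        # A network-facing app such as httpreply needs a virtual interface.
        lines.append(f"vif = [ 'bridge={bridge}' ]")
    return "\n".join(lines) + "\n"


# Placeholder values: adjust the path, memory size and bridge to your setup.
print(render_xl_config("httpreply", "build/httpreply_xen-x86_64", 32, 1,
                       bridge="xenbr0"))
```

The rendered text would be saved to a file and that file passed to xl create.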

Investigating Unikraft’s Operation/Internal Structure with GDB

To investigate how Unikraft implements the hierarchical structure of libraries and build system shown earlier, I will try step-debugging with GDB. First, I’ll build the officially supported helloworld app for x86_64/Xen and x86_64/KVM using the same procedure as before. I’ll set Optimization Level to “No optimization” and Debugging information to “Level 3”.

First, to run the x86_64/Xen image, I send the build image to dom0 on the virtual machine. Since I want the source for GDB this time, I’ll send the entire project. Generally, to debug domU from dom0 using GDB, you launch gdbsx (GDB server for Xen) as a process in dom0 (the following procedure follows the Debugging chapter of the Unikraft tutorial).

First, similar to httpreply, write the configuration file and launch the Unikernel domain as domU. At this time, pause execution with the -p option and check the domain ID with xl list.

$ xl create -c -p helloworld.cfg

$ xl list

From another terminal, enter dom0 and launch gdbsx.

$ gdbsx -a <domain id> <architecture bit width(64)> <port (9999)>

From yet another terminal, enter dom0 and connect the GDB client. Specify the file with the .dbg extension.

$ gdb --eval-command="target remote :9999" path/to/helloworld_xen-x86_64.dbg

This starts the GDB session. Since I’m running an image built on a different host, I’ll correct the path to the source code.

(gdb) set substitute-path <prior path> <current path>

Next, for comparison, I’ll run the Unikernel on KVM. The following procedure follows the documentation hosted in the Unikraft repository.

First, launch the Unikernel VM on KVM. The -s option is a shorthand for -gdb tcp::1234.

$ qemu-system-x86_64 -s -S -cpu host -enable-kvm -m 128 -nodefaults -no-acpi -display none -serial stdio -device isa-debug-exit -kernel path/to/helloworld_kvm-x86_64 -append verbose

From another terminal, connect the GDB client.

$ gdb --eval-command="target remote :1234" path/to/helloworld_kvm-x86_64.dbg

When debugging code after the boot code (after __libkvmplat_start32), set a hardware breakpoint. Also, set the CPU architecture correctly.

(gdb) hbreak <location>

(gdb) continue

(gdb) disconnect

(gdb) set arch <cpu architecture>

(gdb) target remote localhost:1234

(gdb) continue

Now that the x86_64-compatible helloworld app is running on both Xen and KVM, I’ll step through both to investigate Unikraft’s structure.

Below are the stack traces captured during a printf call, at a point where the stack had become reasonably deep (the full paths were too long and hard to read, so I rewrote them as relative paths).

printf() on x86_64/Xen

#0 HYPERVISOR_event_channel_op (cmd=4, op=0x15f9b0)

at unikraft/plat/xen/include/xen-x86/hypercall64.h:255

#1 0x00000000000074fa in notify_remote_via_evtchn (port=2)

at unikraft/plat/xen/include/common/events.h:98

#2 0x00000000000076c1 in hv_console_output (str=0x15fa50 "Hello world!\n", len=13)

at hello_unikraft/unikraft/plat/xen/hv_console.c:179

#3 0x0000000000007404 in ukplat_coutk (str=0x15fa50 "Hello world!\n", len=13)

at unikraft/plat/xen/console.c:92

#4 0x000000000000c811 in vfprintf (fp=0x1c168 <stdout>, fmt=0x18e46 "Hello world!\n", ap=0x15fea0)

at unikraft/lib/nolibc/stdio.c:443

#5 0x000000000000c929 in vprintf (fmt=0x18e46 "Hello world!\n", ap=0x15fea0)

at unikraft/lib/nolibc/stdio.c:466

#6 0x000000000000c9ca in printf (fmt=0x18e46 "Hello world!\n")

at unikraft/lib/nolibc/stdio.c:475

#7 0x0000000000009246 in main (argc=1, argv=0x60000 <argv.2687>)

at apps/helloworld/main.c:8

printf() on x86_64/KVM

#0 inb (port=1021)

at unikraft/plat/common/include/x86/cpu.h:219

#1 0x0000000000108c32 in serial_tx_empty ()

at unikraft/plat/kvm/x86/serial_console.c:56

#2 0x0000000000108c4d in serial_write (a=72 'H')

at unikraft/plat/kvm/x86/serial_console.c:61

#3 0x0000000000108c8f in _libkvmplat_serial_putc (a=72 'H')

at unikraft/plat/kvm/x86/serial_console.c:71

#4 0x0000000000107c71 in ukplat_coutk (buf=0x7f1fa50 "Hello world!\n", len=13)

at unikraft/plat/kvm/x86/console.c:67

#5 0x000000000010ec85 in vfprintf (fp=0x11f0c8 <stdout>,

fmt=0x11bc2c "Hello world!\n", ap=0x7f1fea0)

at unikraft/lib/nolibc/stdio.c:443

#6 0x000000000010ed9d in vprintf (fmt=0x11bc2c "Hello world!\n", ap=0x7f1fea0)

at unikraft/lib/nolibc/stdio.c:466

#7 0x000000000010ee3e in printf (fmt=0x11bc2c "Hello world!\n")

at unikraft/lib/nolibc/stdio.c:475

#8 0x000000000010b6ba in main (argc=2, argv=0x1245a0 <argv>)

at apps/helloworld/main.c:8

In a printf() call, nolibc’s printf(), one of the libraries hosted in Unikraft’s main repository, is called. The Unikraft project hosts newlib, an implementation of libc, as an external library. However, if newlib is not cloned as an external library and explicitly specified in menuconfig, nolibc is linked to make libc APIs available.

Subsequently, functions that would typically be reached through system calls on a general-purpose OS are reached by ordinary function calls. The call paths for Xen and KVM diverge at ukplat_coutk(), which is called from vfprintf(): each platform’s own implementation under the plat directory is called. On the diverged paths, KVM issues an inb instruction and Xen issues a hypercall.

As seen above, Unikraft groups platform-dependent processing under the plat directory. At build time, it links the object files corresponding to the target platform, which separates platform-dependent code from platform-independent code. I also found that nolibc, hosted under lib in the main Unikraft repository, is linked as the default libc.
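The separation visible in the two traces can be modeled in a few lines. This is only a conceptual sketch in Python, not Unikraft code: `xen_coutk`, `kvm_coutk`, and `build_image` are hypothetical stand-ins, and the real selection happens at link time rather than through a dictionary lookup. The model borrows one detail from the traces: the Xen console receives the whole buffer at once, while the KVM serial console is driven one character at a time.

```python
def xen_coutk(log):
    """Stand-in for the Xen console backend: one request for the whole buffer."""
    def coutk(text):
        log.append(("hypercall", text))
        return len(text)
    return coutk


def kvm_coutk(log):
    """Stand-in for the KVM serial backend: one port write per character."""
    def coutk(text):
        for ch in text:
            log.append(("serial", ch))
        return len(text)
    return coutk


def build_image(platform, log):
    """Bind the platform-independent printf to one platform backend.

    This mimics choosing which plat/ objects get linked into the image.
    """
    backends = {"xen": xen_coutk, "kvm": kvm_coutk}
    coutk = backends[platform](log)

    def printf(fmt, *args):
        # Platform-independent formatting, platform-specific output.
        return coutk(fmt % args if args else fmt)

    return printf


xen_log = []
printf = build_image("xen", xen_log)
printf("Hello world!\n")
print(xen_log)  # [('hypercall', 'Hello world!\n')]
```

The application-facing printf is identical in both images; only the function bound behind the console interface changes.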

Next, to investigate how it integrates with external libraries and ensures library extensibility, I’ll look at the stack trace when the httpreply app calls socket. The environment is x86_64/KVM.

#0 ukplat_lcpu_restore_irqf (flags=flags@entry=0)

at unikraft/plat/kvm/x86/lcpu.c:61

#1 0x000000000012647d in uk_waitq_wake_up (wq=0x15e190 <lock_tcpip_core+16>)

at unikraft/lib/uksched/include/uk/wait.h:139

#2 uk_mutex_unlock (m=m@entry=0x15e180 <lock_tcpip_core>)

at unikraft/lib/uklock/include/uk/mutex.h:124

#3 sys_mutex_unlock (mtx=mtx@entry=0x15e180 <lock_tcpip_core>)

at libs/lwip/mutex.c:74

#4 0x000000000013a266 in tcpip_send_msg_wait_sem (

fn=0x138110 <lwip_netconn_do_newconn>, apimsg=apimsg@entry=0x1dfee0,

sem=sem@entry=0x7fe3028)

at apps/httpreply/build/liblwip/origin/lwip-2.1.2/src/api/tcpip.c:443

#5 0x0000000000135d61 in netconn_apimsg (apimsg=0x1dfee0, fn=<optimized out>)

at apps/httpreply/build/liblwip/origin/lwip-2.1.2/src/api/api_lib.c:131

#6 netconn_new_with_proto_and_callback (t=t@entry=NETCONN_TCP,

proto=proto@entry=0 '\000', callback=callback@entry=0x13b1a0 <event_callback>)

at apps/httpreply/build/liblwip/origin/lwip-2.1.2/src/api/api_lib.c:161

#7 0x000000000013cb81 in lwip_socket (domain=domain@entry=2, type=type@entry=1,

protocol=protocol@entry=0)

at apps/httpreply/build/liblwip/origin/lwip-2.1.2/src/api/sockets.c:1716

#8 0x000000000012789c in socket (domain=domain@entry=2, type=type@entry=1,

protocol=protocol@entry=0) at libs/lwip/sockets.c:335

#9 0x000000000014448e in main (argc=<optimized out>, argv=<optimized out>) at apps/httpreply/main.c:65

Tracing the socket() call reveals that most of the processing is done by lwip, an open-source library downloaded from outside the Unikraft project. However, the socket() entry point and sys_mutex_unlock() are implemented by glue code in the unikraft/libs/lwip repository, which the Unikraft project hosts.

Furthermore, Unikraft-specific OS functions like locking mechanisms, such as uk_mutex_unlock(), call the implementation in the Unikraft main repository.

Thus, when using existing external libraries like lwip, the Unikraft project hosts only the implementations of parts that are likely to be platform-dependent or Unikraft OS-dependent. The unikraft/libs/lwip repository within the Unikraft project hosts glue code for using existing implementations from outside the project.
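The layering described above can be read directly off the source paths in the backtrace. As a small sketch, the script below labels each frame by the path prefix it was compiled from. The layer names and the `classify` and `layers_of` helpers are my own, the path patterns are the ones that happen to appear in the trace in this article, and the embedded trace is an abridged copy of it.

```python
import re

# Checked in order; the first marker found in the path wins.
LAYERS = [
    ("/origin/", "upstream library source"),
    ("unikraft/plat/", "platform code"),
    ("unikraft/lib/", "Unikraft core library"),
    ("libs/", "external library glue"),
    ("apps/", "application"),
]

FRAME_RE = re.compile(r"^#(\d+)\s+(?:0x[0-9a-f]+ in )?(\w+) \(", re.M)
AT_RE = re.compile(r"\bat (\S+):\d+")


def classify(path):
    """Map a source path to a layer name based on the markers above."""
    for marker, layer in LAYERS:
        if marker in path:
            return layer
    return "unknown"


def layers_of(backtrace):
    """Return (function, layer) pairs for each frame of GDB backtrace text."""
    starts = list(FRAME_RE.finditer(backtrace))
    result = []
    for i, match in enumerate(starts):
        end = starts[i + 1].start() if i + 1 < len(starts) else len(backtrace)
        at = AT_RE.search(backtrace[match.start():end])
        result.append((match.group(2), classify(at.group(1) if at else "")))
    return result


# Abridged from the httpreply trace in this article.
TRACE = """\
#0 ukplat_lcpu_restore_irqf (flags=flags@entry=0)
at unikraft/plat/kvm/x86/lcpu.c:61
#2 uk_mutex_unlock (m=m@entry=0x15e180 <lock_tcpip_core>)
at unikraft/lib/uklock/include/uk/mutex.h:124
#3 sys_mutex_unlock (mtx=mtx@entry=0x15e180 <lock_tcpip_core>)
at libs/lwip/mutex.c:74
#7 0x000000000013cb81 in lwip_socket (domain=domain@entry=2, type=type@entry=1,
protocol=protocol@entry=0)
at apps/httpreply/build/liblwip/origin/lwip-2.1.2/src/api/sockets.c:1716
#8 0x000000000012789c in socket (domain=domain@entry=2, type=type@entry=1,
protocol=protocol@entry=0) at libs/lwip/sockets.c:335
#9 0x000000000014448e in main (argc=<optimized out>, argv=<optimized out>) at apps/httpreply/main.c:65
"""

for func, layer in layers_of(TRACE):
    print(f"{func:28} {layer}")
```

Run against the abridged trace, socket() and sys_mutex_unlock() come out as glue, lwip_socket() as upstream source, and uk_mutex_unlock() as Unikraft core, which matches the reading given above.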

Now, officially supported features in Unikraft project repositories, like lwip, can be used without effort. However, if your project’s app wants to use unsupported features, you must port the external library placed under the libs directory yourself.

As mentioned earlier, Unikraft seems to expect developers to contribute to this external library. However, to download an existing library from outside the Unikraft project and make it usable from a Unikraft app, it must be integrated into Unikraft’s Kconfig-based build system.

Below, referencing the Unikraft project’s unikraft/libs/lwip repository, we will look at the work required to use an existing library from outside Unikraft as a Unikraft library. For turning an existing library into a Unikraft library, I am referencing slides by NEC and the Xen Project page. A conceptual diagram of the build system is shown below.

The top directory of a Unikraft library primarily requires the following two files:

- Config.uk

- Makefile.uk

Config.uk is necessary for adding configuration options in menuconfig and is written in Kconfig syntax. As seen in the Config.uk in the unikraft/libs/lwip repository, it is written with syntax like:

menuconfig LIBMYLIB

bool "ukmylib: lib description"

# libraries are off as default

default n

# dependencies

select LIBNOLIBC if !HAVE_LIBC

if LIBMYLIB

# list of configuration parameters

config SETTING_ENTRY

[type] "[description]"

default [value]

select LIBOTHER

endif

This allows libraries to be selected and configured, and their dependencies to be described, from menuconfig.

Makefile.uk is a file that describes all the processing necessary for building the library and is written in Makefile syntax. As seen in the unikraft/libs/lwip repository, it describes:

- Library registration (adding to the library list and defining various variables)

- Download URL and patch specification for external library sources

- Library include path specification

- Source code file specification

(Pre-built binaries can also be specified as link targets).

Other than these two files, patch files and glue code for Unikraft OS-dependent parts need to be hosted. Each file of the hosted glue code is registered with the build system by the source code file specification in Makefile.uk.

As seen above, adding an external library from outside the Unikraft project to Unikraft’s external libraries primarily requires effort in writing glue code for Unikraft OS-dependent parts and creating patches for the downloaded original library. For developers with an understanding of Unikraft’s internals, this seems to be a mechanism that allows for relatively smooth porting.

UKL (Unikernel Linux)

While researching, I found a project with goals similar to those of Unikraft. In May 2019, the paper “Unikernels: The Next Stage of Linux’s Dominance” [4] introduced UKL (Unikernel Linux), a project attempting to create a mechanism to build Unikernels by using the source code of the existing Linux kernel as a library.

UKL also states that making it easy to port existing applications is crucial for increasing Unikernel adoption and proposes using the existing Linux kernel codebase to enrich libraries as a means to this end.

In other words, the UKL project sets the following goals:

- Most apps and user libraries should run on a Unikernel without modification. That is, simply changing the GCC target should allow building the target app as a Unikernel image.

- Avoid overhead from ring transitions. That is, kernel functions should be accessible by simple function calls, not system call traps.

- Enable optimizations spanning the kernel and app layers.

- Changes to the Linux source code should be minimal to be acceptable upstream.

To examine the feasibility of achieving these, a prototype was implemented in [4]. The changes to the Linux kernel and its surroundings in the prototype are listed below:

- Added a kernel configuration option to select compilation as UKL.

- Changed app code invocation to call the app’s main function directly without creating a process.

- Added a UKL library for direct function calls instead of syscall issuance.

- Modified glibc to use the above UKL library instead of issuing syscalls.

- Modified the kernel’s linker script.

- Added some initialization code before calling the app.

- Changed kernel linking to link the app / glibc / UKL library into a single binary.

With these changes, the authors report that they were able to build a Unikernel for the target app, with the caveats that the image still includes the entire Linux kernel and that some minor fixes remain. Furthermore, they argue that an upstream merge is feasible because the amount of changed code is small.

Summary

As of February 2020, many projects exist to build applications written for specific purposes or in specific languages as Unikernels. However, adoption has been slow, mainly due to the high cost of porting existing applications.

Projects like Unikraft and Unikernel Linux are progressing with the intent to solve this challenge. Unikraft aims to enrich its libraries through an easily extensible build system, but a concern is that the cost of supporting Unikraft itself and external library version upgrades will increase with the growth of officially hosted libraries.

Unikernel Linux, on the other hand, has the asset of abundant existing code supported by the Linux community, but if it is not merged into the Linux upstream, the cost of continuously maintaining patches will arise.

If Unikraft or Unikernel Linux can achieve their ideal states, it is quite likely that Unikernels will be adopted both in hypervisor-based embedded development and for applications running in the cloud.

References (Papers)

[1] Anil Madhavapeddy et al. (2013) “Unikernels: Library Operating Systems for the Cloud” ACM SIGARCH Computer Architecture News

[2] André Hergenhan and Gernot Heiser. (2008) “Operating Systems Technology for Converged ECUs”

[3] Lee Pike et al. (2015) “Securing the Automobile: a Comprehensive Approach” Galois Inc. Technical Report

[4] Ali Raza et al. (2019) “Unikernels: The Next Stage of Linux’s Dominance” HotOS ’19: Proceedings of the Workshop on Hot Topics in Operating Systems