Executive Summary

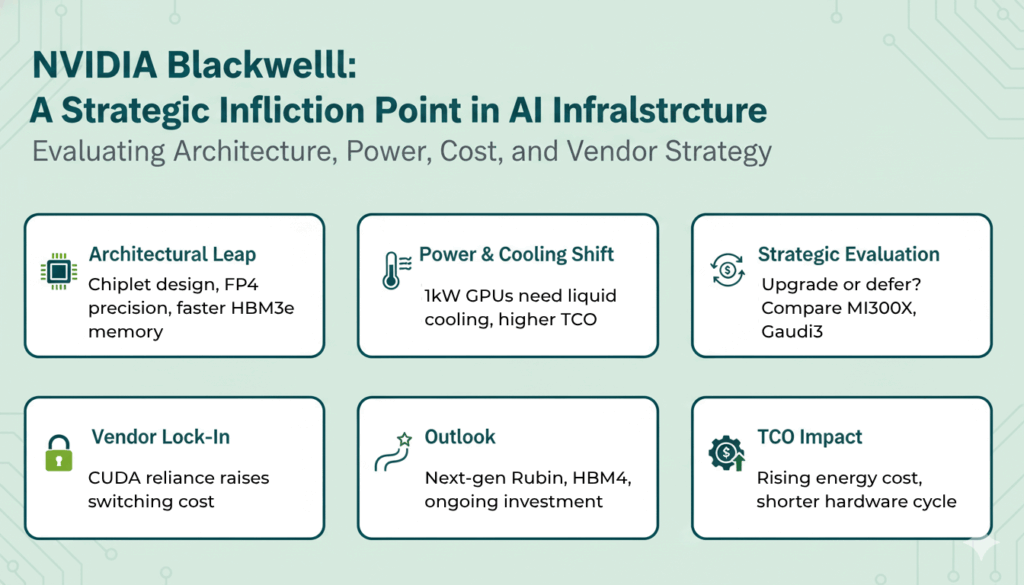

NVIDIA’s Blackwell platform represents a significant step beyond the Hopper architecture, driven by a new chiplet design and FP4 precision for AI inference. Adopting Blackwell is not a simple GPU swap but a strategic data center decision, mandating a shift to liquid cooling due to increased power demands of up to 1kW per GPU. Consequently, a rigorous evaluation of Blackwell’s Total Cost of Ownership (TCO) against potent, and potentially more capital-efficient, alternatives from AMD and Intel is now a fiduciary necessity.

1. Introduction: The New Imperative in AI Infrastructure

Continuous performance gains in artificial intelligence no longer come from simply making chips faster; they depend on fundamental architectural innovation. For the past several years, NVIDIA’s Hopper architecture, powering the H100 and H200 GPUs, has served as the industry’s baseline for training and deploying large-scale AI models. It established a standard for performance that has defined the current generation of AI data centers.

The Blackwell platform is NVIDIA’s successor to Hopper, engineered to meet the escalating demands of trillion-parameter models and massive inference workloads. This article provides a strategic analysis of Blackwell’s key architectural changes, its significant infrastructure implications, and the critical factors that CTOs and infrastructure leaders must consider. We will examine the mandatory shifts in power and cooling, the true Total Cost of Ownership (TCO) beyond the chip price, and the competitive landscape shaping the future of AI hardware.

2. Blackwell’s Core Architectural Shifts from Hopper

The performance leap from Hopper to Blackwell is rooted in three fundamental changes to the GPU’s design, data processing capabilities, and connectivity.

2.1. From Monolithic Die to a Chiplet Design

Unlike the single-die design of the Hopper GPU, Blackwell uses a “chiplet” approach: it connects two large, reticle-limited dies (roughly 800mm² each) so that they function as a single, unified GPU. NVIDIA specifies the die-to-die link at 10 TB/s. Because no single die can exceed the lithography reticle limit, this design sidesteps a physical manufacturing ceiling and effectively doubles the silicon available to one GPU. According to analyses from 3DInCites, the integration depends on TSMC’s CoWoS-L (Chip-on-Wafer-on-Substrate with Local Silicon Interconnect), a complex packaging technology that provides the high-speed, low-latency connection between the two dies and has also been a source of manufacturing difficulty.

2.2. Second-Generation Transformer Engine and FP4 Precision

Blackwell introduces the second-generation Transformer Engine, which adds a critical new feature: support for FP4, a 4-bit floating-point data format. As detailed in NVIDIA’s developer blog, NVIDIA’s NVFP4 variant pairs 4-bit values with per-block scale factors to preserve accuracy and is engineered to increase performance and efficiency for large language model (LLM) inference. Storing each parameter in 4 bits instead of FP8’s 8 bits halves the weight memory footprint and the data moved per operation, allowing models to run faster and more economically; NVIDIA reports minimal accuracy loss relative to FP8 on the models it evaluated.
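To make the FP4 format more concrete, the sketch below quantizes values to the E2M1 grid (1 sign, 2 exponent, and 1 mantissa bit, giving magnitudes 0, 0.5, 1, 1.5, 2, 3, 4, and 6) using one scale factor per 16-element block, loosely modeled on NVFP4. It is a simplified illustration, not NVIDIA’s implementation: the scale is kept as a full-precision Python float rather than being encoded in FP8, there is no per-tensor second-level scale, and ties round toward the smaller magnitude. All function names are hypothetical.

```python
import math
from typing import List, Tuple

# Non-negative magnitudes representable in FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit).
E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
E2M1_MAX = 6.0
BLOCK_SIZE = 16  # NVFP4's micro-block size, treated as a parameter here


def nearest_e2m1(value: float) -> float:
    """Round to the nearest signed E2M1 value, saturating at +/-6 (ties go to the smaller magnitude)."""
    magnitude = min(abs(value), E2M1_MAX)
    nearest = min(E2M1_MAGNITUDES, key=lambda m: abs(m - magnitude))
    return math.copysign(nearest, value)


def quantize_blocks(values: List[float], block_size: int = BLOCK_SIZE) -> List[Tuple[float, List[float]]]:
    """Return one (scale, fp4_values) pair per block; the scale maps the block's max magnitude to 6."""
    blocks = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        scale = amax / E2M1_MAX if amax > 0 else 1.0  # kept as a Python float (simplification)
        blocks.append((scale, [nearest_e2m1(v / scale) for v in block]))
    return blocks


def dequantize_blocks(blocks: List[Tuple[float, List[float]]]) -> List[float]:
    """Multiply each FP4 value by its block's scale to recover approximate originals."""
    return [scale * q for scale, codes in blocks for q in codes]


def effective_bits_per_value(block_size: int = BLOCK_SIZE, scale_bits: int = 8) -> float:
    """Storage per element including one shared scale per block (an 8-bit scale, as in NVFP4)."""
    return 4 + scale_bits / block_size


weights = [0.02 * i - 0.3 for i in range(32)]  # toy values, not real model weights
restored = dequantize_blocks(quantize_blocks(weights))
print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))
print("bits per value:", effective_bits_per_value())  # 4.5 with the defaults
```

The `effective_bits_per_value` helper shows why block scaling is cheap: one 8-bit scale shared by 16 values adds only half a bit per element, so storage stays close to 4 bits versus 8 for FP8.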

2.3. Enhanced Memory and Interconnect

Blackwell significantly increases memory bandwidth using HBM3e (NVIDIA quotes up to 8 TB/s per B200 GPU, versus 3.35 TB/s for the H100 SXM), while the broader industry roadmap moves toward HBM4 for future architectures like Rubin. This memory upgrade is essential for keeping the GPU’s processing cores supplied with data, because LLM inference is often limited by how fast weights can be read from memory rather than by raw compute. To complement this, fifth-generation NVLink doubles per-GPU interconnect bandwidth to 1.8 TB/s (from 900 GB/s on Hopper), a critical factor for efficiently training the massive AI models that define the current state of the art.
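Two back-of-the-envelope formulas help connect these bandwidth figures to workload performance. The first treats single-stream LLM decoding as memory-bound (every generated token reads all weights once), so tokens per second cannot exceed HBM bandwidth divided by weight bytes. The second is the standard bandwidth term of a ring all-reduce, 2(N - 1)/N × S / B, used for gradient synchronization. Both are idealized models that ignore latency, KV-cache traffic, batching, and NVSwitch topology; the 70B-parameter model and 1 GB message are hypothetical, and halving the quoted bidirectional NVLink figures to get per-direction bandwidth is an assumption.

```python
def decode_tokens_per_second_bound(params: float, bits_per_param: float, hbm_bytes_per_s: float) -> float:
    """Upper bound on batch-1 decode speed when each token must read every weight once from HBM.

    Ignores KV-cache reads, batching, on-chip caching, and compute limits.
    """
    weight_bytes = params * bits_per_param / 8
    return hbm_bytes_per_s / weight_bytes


def ring_allreduce_seconds(message_bytes: float, num_gpus: int, link_bytes_per_s: float) -> float:
    """Bandwidth term of a ring all-reduce, 2 * (N - 1) / N * S / B; latency is ignored."""
    if num_gpus < 2:
        return 0.0
    return 2 * (num_gpus - 1) / num_gpus * message_bytes / link_bytes_per_s


# Hypothetical 70B-parameter model on a GPU with 8 TB/s of HBM bandwidth.
for label, bits in (("FP8", 8), ("FP4", 4)):
    bound = decode_tokens_per_second_bound(70e9, bits, 8e12)
    print(f"{label}: {bound:.1f} tokens/s upper bound")

# 1 GB all-reduce across 8 GPUs; per-direction bandwidth assumed to be half the
# quoted bidirectional NVLink figure (450 GB/s for Hopper, 900 GB/s for Blackwell).
for label, link in (("Hopper", 450e9), ("Blackwell", 900e9)):
    print(f"{label}: {ring_allreduce_seconds(1e9, 8, link) * 1e3:.2f} ms")
```

Halving bits per parameter doubles the memory-bound ceiling, which is why FP4 and HBM bandwidth reinforce each other, while doubling link bandwidth halves the idealized all-reduce time.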

| Feature | Hopper (H100) | Blackwell (B200) |
|---|---|---|
| Design | Monolithic | Chiplet (dual-die) |
| Newest low-precision AI format | FP8 | FP4 |
| Max power per GPU (approx.) | 700W | 1kW |

3. The Real Cost of Power: Infrastructure Implications for Blackwell

The transition to Blackwell extends far beyond the server rack, imposing new requirements on the data center’s physical infrastructure that carry significant financial implications. The move to a dual-die chiplet architecture, while enabling Blackwell’s performance leap, is also a principal driver of its 1kW thermal design point: roughly twice the silicon draws substantially more power. For dense, at-scale deployments, this couples Blackwell’s performance gains to direct liquid cooling, making the GPU purchase and the data center retrofit a single, inseparable procurement decision.

3.1. A New Power and Thermal Threshold

Blackwell GPUs are rated to consume up to 1 kilowatt (kW) of power each, roughly a 43% increase over the 700W TDP of the Hopper H100. As noted by Navitas Semiconductor, this power density generates a thermal load that traditional air cooling cannot remove efficiently in high-density deployments. Cooling racks of 1kW GPUs with air would require impractical volumes of airflow and fan energy, placing a hard limit on computational density.
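The power increase and its effect at rack level can be checked with simple arithmetic. The sketch below computes the GPU-level increase and an approximate per-rack heat load; the 32-GPU rack and the 30% allowance for CPUs, networking, and fans are hypothetical placeholders, not figures from the sources cited here.

```python
def percent_increase(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


def rack_heat_kw(gpus_per_rack: int, gpu_watts: float, non_gpu_overhead: float = 0.0) -> float:
    """GPU heat per rack in kW, optionally inflated by a hypothetical non-GPU overhead fraction."""
    return gpus_per_rack * gpu_watts * (1.0 + non_gpu_overhead) / 1000.0


print(f"GPU power increase: {percent_increase(700, 1000):.1f}%")  # 42.9%

GPUS_PER_RACK = 32  # hypothetical: 4 servers x 8 GPUs
for label, watts in (("Hopper", 700), ("Blackwell", 1000)):
    print(f"{label}: {rack_heat_kw(GPUS_PER_RACK, watts, non_gpu_overhead=0.3):.1f} kW per rack")
```

Substituting a real rack layout and measured non-GPU overhead is what turns this into a usable planning number.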

3.2. The Mandatory Shift to Liquid Cooling

To manage this intense thermal output, adopting Blackwell at scale necessitates a facility-wide transition to Direct Liquid Cooling (DLC). NVIDIA’s own analysis claims that liquid-cooled Blackwell systems are over 300 times more water-efficient than traditional air-cooled architectures. Industry partners like Supermicro and nVent are already producing DLC systems engineered specifically for Blackwell-based servers, a sign that the ecosystem is retooling for a liquid-cooled future. For dense deployments, this is not an optional upgrade but a prerequisite for running the platform effectively.
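Basic heat-transfer arithmetic shows why liquid is the practical medium at these densities. The flow needed to carry away heat P with a coolant temperature rise ΔT is Q = P / (ρ · c_p · ΔT), and water’s volumetric heat capacity is roughly 3,500 times that of air. The sketch below applies this to a hypothetical 32 kW rack with assumed temperature rises of 15 K for air and 10 K for water; the property values are textbook approximations. It compares coolant flow, not water consumption, so it does not reproduce NVIDIA’s 300x water-efficiency figure.

```python
AIR_DENSITY = 1.2        # kg/m^3, approximate, near sea level at room temperature
AIR_CP = 1005.0          # J/(kg*K), specific heat of air
WATER_DENSITY = 997.0    # kg/m^3, approximate, at ~25 C
WATER_CP = 4186.0        # J/(kg*K), specific heat of water
M3S_TO_CFM = 2118.88     # cubic feet per minute per m^3/s
M3S_TO_LPM = 60000.0     # litres per minute per m^3/s


def volumetric_flow_m3s(heat_watts: float, density: float, cp: float, delta_t_k: float) -> float:
    """Flow needed to remove heat_watts with a coolant temperature rise of delta_t_k: Q = P / (rho * cp * dT)."""
    return heat_watts / (density * cp * delta_t_k)


RACK_HEAT_W = 32_000  # hypothetical 32 kW rack
air = volumetric_flow_m3s(RACK_HEAT_W, AIR_DENSITY, AIR_CP, delta_t_k=15)
water = volumetric_flow_m3s(RACK_HEAT_W, WATER_DENSITY, WATER_CP, delta_t_k=10)
print(f"air:   {air * M3S_TO_CFM:,.0f} CFM")
print(f"water: {water * M3S_TO_LPM:,.1f} L/min")
```

Under these assumptions the rack needs on the order of 3,700 CFM of air but only about 46 L/min of water.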

3.3. Quantifying the Total Cost of Ownership (TCO)

The sticker price of a Blackwell GPU is only the starting point. Second-order infrastructure costs can be substantial relative to the hardware outlay, and failing to model them is a frequent cause of budget overruns in next-generation AI deployments. Drawing on general cost models from sources like Appinventiv, a true TCO analysis must include the cost of retrofitting facilities with liquid cooling plumbing, upgrading power distribution units to handle the increased load, and higher recurring electricity costs.

- Blackwell Total Cost of Ownership (TCO) Factors
  - GPU Acquisition Cost
  - Data Center Retrofit (Liquid Cooling Installation)
  - Upgraded Power Delivery Infrastructure
  - Increased Annual Electricity Costs
  - Specialized Maintenance & Training
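The factors above can be combined into a simple multi-year cost model. The sketch below is a minimal illustration with hypothetical field names and placeholder inputs, not vendor pricing; it counts only GPU power for electricity (scaled by average utilization and facility PUE) and treats the retrofit and power-delivery upgrades as one-time costs.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class TcoInputs:
    gpu_count: int
    gpu_unit_cost: float       # USD per GPU (placeholder)
    retrofit_cost: float       # one-time liquid-cooling retrofit, USD
    power_infra_cost: float    # one-time PDU / power-delivery upgrades, USD
    gpu_watts: float           # rated GPU power, W
    utilization: float         # average fraction of rated power actually drawn
    pue: float                 # facility power usage effectiveness (>= 1.0)
    usd_per_kwh: float
    annual_maintenance: float  # specialized maintenance and training, USD per year
    years: int


def tco_breakdown(x: TcoInputs) -> dict:
    """Return each cost category over the planning horizon, plus their total."""
    gpu_kw = x.gpu_count * x.gpu_watts * x.utilization / 1000.0
    annual_electricity = gpu_kw * x.pue * HOURS_PER_YEAR * x.usd_per_kwh
    costs = {
        "gpu_acquisition": x.gpu_count * x.gpu_unit_cost,
        "retrofit": x.retrofit_cost,
        "power_infrastructure": x.power_infra_cost,
        "electricity": annual_electricity * x.years,
        "maintenance_training": x.annual_maintenance * x.years,
    }
    costs["total"] = sum(costs.values())
    return costs


# Placeholder inputs for illustration only.
example = TcoInputs(gpu_count=64, gpu_unit_cost=30_000.0, retrofit_cost=500_000.0,
                    power_infra_cost=250_000.0, gpu_watts=1000.0, utilization=0.7,
                    pue=1.3, usd_per_kwh=0.10, annual_maintenance=100_000.0, years=4)
for category, usd in tco_breakdown(example).items():
    print(f"{category:>22}: ${usd:,.0f}")
```

Keeping each category separate makes it easy to see which assumption dominates the total and to rerun the model with a facility’s own figures.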

4. A Strategic Decision Framework for CTOs

Deciding whether and when to upgrade to Blackwell requires a careful evaluation of workloads, budgets, and the competitive market.

4.1. When an Upgrade to Blackwell Is Justified

An upgrade is justified for organizations where training state-of-the-art foundation models or achieving market-leading inference latency directly drives competitive advantage and revenue. For these use cases, the performance gains can warrant significant capital investment. Recent MLPerf rounds support the platform’s readiness for extreme-scale workloads: Blackwell systems appear in MLCommons’ MLPerf Inference v5.1 results, and CoreWeave, NVIDIA, and IBM reported submitting the largest-ever MLPerf results on NVIDIA GB200 Grace Blackwell Superchips.

4.2. When Sticking with Hopper or Alternatives Makes Sense

Organizations whose current AI workloads are well-served by the performance of the Hopper architecture should carefully consider deferring a Blackwell upgrade. If the existing infrastructure cannot support the power and cooling demands without a complete overhaul, the capital expenditure may be prohibitive. For these users, continuing with Hopper or exploring a partial or mixed-generation deployment offers a more financially viable strategy without sacrificing necessary performance.
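One way to make the upgrade question concrete is to compare platforms on cost per unit of work rather than on performance alone. The sketch below computes annualized cost per million tokens served; the function names, the 60% utilization default, and all example figures are hypothetical. On this metric, an upgrade pays off only if the new platform’s throughput multiple exceeds its cost multiple.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def cost_per_million_tokens(annual_cost_usd: float, tokens_per_second: float, utilization: float) -> float:
    """Annualized cost divided by the tokens served in a year, in USD per million tokens."""
    tokens_per_year = tokens_per_second * utilization * SECONDS_PER_YEAR
    return annual_cost_usd / (tokens_per_year / 1e6)


def upgrade_pays_off(old_cost: float, old_tps: float, new_cost: float, new_tps: float,
                     utilization: float = 0.6) -> bool:
    """True when the new platform serves tokens more cheaply at the same utilization."""
    return (cost_per_million_tokens(new_cost, new_tps, utilization)
            < cost_per_million_tokens(old_cost, old_tps, utilization))


# Hypothetical platforms: (annualized TCO in USD, sustained tokens/s at full load).
hopper = (1.0e6, 40_000)
blackwell = (2.2e6, 100_000)
for name, (cost, tps) in (("Hopper", hopper), ("Blackwell", blackwell)):
    print(f"{name}: ${cost_per_million_tokens(cost, tps, 0.6):.2f} per million tokens")
print("upgrade pays off:", upgrade_pays_off(*hopper, *blackwell))
```

In this example the newer platform costs 2.2x as much per year but serves 2.5x the tokens, so it wins; at 3x the cost it would not.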

4.3. The Competitive Landscape: A Balanced View

NVIDIA does not operate in a vacuum. Strong alternatives from AMD and Intel offer compelling options that merit serious consideration.

- AMD: The AMD Instinct MI300X has emerged as a direct and powerful competitor to NVIDIA’s H100, with benchmarks reported by The Next Platform indicating competitive performance in key AI workloads. Looking forward, AMD has positioned its MI350 series as its direct answer to Blackwell.

- Intel: The Intel Gaudi 3 accelerator positions itself as a value-focused alternative. It is priced lower than the H100 and, while it offers lower absolute performance, it presents a viable option for businesses prioritizing a lower total cost of ownership (TCO) for specific workloads.

| Vendor | Current Flagship | Strategic Position |
|---|---|---|
| NVIDIA | Blackwell B200 | Top-tier performance, requires infrastructure overhaul |
| AMD | Instinct MI300X | Strong performance competitor to H100 |
| Intel | Gaudi 3 | TCO-focused alternative |

5. Navigating the CUDA Ecosystem and Vendor Lock-In

NVIDIA’s most durable strategic advantage is not its hardware but its mature and comprehensive CUDA software platform. This ecosystem creates significant vendor lock-in, making it difficult for organizations to switch to competing hardware without substantial software re-engineering. This dependency on the CUDA ecosystem must be treated as a strategic risk, limiting long-term hardware optionality and reducing leverage in price negotiations. CTOs should mandate pilot programs for hardware-agnostic platforms like those from Modular to quantify the switching costs and develop a credible long-term de-risking strategy.
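A first step toward quantifying switching costs is measuring how much of a codebase touches CUDA-specific constructs. The heuristic scanner below counts a few such markers with regular expressions; the marker list and function names are hypothetical, the list is non-exhaustive, and raw counts are only a rough proxy for porting effort (they cannot distinguish a thin wrapper from a hand-tuned kernel).

```python
import re
from collections import Counter
from pathlib import Path

# Hypothetical, non-exhaustive regex markers of CUDA-specific code.
CUDA_MARKERS = {
    "kernel_qualifier": r"__global__|__device__|__shared__",
    "runtime_api": r"\bcuda[A-Z]\w*\s*\(",          # e.g. cudaMalloc(
    "kernel_launch": r"<<<",                          # triple-chevron launch syntax
    "cuda_libraries": r"\b(?:cublas|cudnn|cufft|curand|nccl)\w*",
    "pytorch_cuda": r"\btorch\.cuda\b|\.cuda\(\)",
}


def count_cuda_markers(source: str) -> Counter:
    """Count occurrences of each marker pattern in one source string."""
    counts = Counter()
    for name, pattern in CUDA_MARKERS.items():
        counts[name] = len(re.findall(pattern, source))
    return counts


def scan_tree(root: str, suffixes=(".cu", ".cuh", ".cpp", ".py")) -> Counter:
    """Sum marker counts over every matching file under root."""
    total = Counter()
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            total += count_cuda_markers(path.read_text(errors="ignore"))
    return total
```

Running `scan_tree("path/to/repo")` before and after a pilot on a hardware-agnostic stack gives a crude but trackable measure of how much CUDA-specific surface remains.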

6. Future Outlook: Beyond Blackwell

The pace of AI hardware innovation continues to accelerate. NVIDIA has already announced its post-Blackwell roadmap, with the “Rubin” architecture slated to follow. This forward-looking plan aligns with a broader industry trend of adopting next-generation technologies like HBM4 memory, a move corroborated by announcements from memory manufacturers such as SK hynix. This context positions Blackwell not as an endpoint, but as one major step in a rapid and continuous evolution of AI infrastructure that will demand ongoing strategic investment.

7. Conclusion: A Strategic Inflection Point for AI Infrastructure

NVIDIA’s Blackwell platform is an engineering marvel that pushes the boundaries of AI computation. However, its adoption is fundamentally an infrastructure and TCO decision, not merely a performance upgrade. The step up to 1kW of power per GPU and the corresponding mandatory shift to direct liquid cooling mark a new and more demanding era for data center design and operation.

The right choice for any organization will depend on a rigorous assessment of its specific workloads, capital budget constraints, and tolerance for vendor lock-in. A careful evaluation of Blackwell’s formidable capabilities against the increasingly strong and strategically diverse alternatives from AMD and Intel is no longer just an option—it is an essential component of any future-proof AI infrastructure strategy.

8. References

- MLCommons. (2025, September 9). MLPerf Inference v5.1 Benchmark Results. https://mlcommons.org/2025/09/mlperf-inference-v5-1-results/

- PR Newswire. (2025, June 4). CoreWeave, NVIDIA and IBM Submit Largest-Ever MLPerf Results on NVIDIA GB200 Grace Blackwell Superchips. https://www.prnewswire.com/news-releases/coreweave-nvidia-and-ibm-submit-largest-ever-mlperf-results-on-nvidia-gb200-grace-blackwell-superchips-302473361.html

- NVIDIA. (2025). The Engine Behind AI Factories | NVIDIA Blackwell Architecture. https://www.nvidia.com/en-us/data-center/technologies/blackwell-architecture/

- Navitas Semiconductor. (2025). Blackwell Will Be 1kW. https://navitassemi.com/nvidias-grace-hopper-runs-at-700-w-blackwell-will-be-1-kw-how-is-the-power-supply-industry-enabling-data-centers-to-run-these-advanced-ai-processors/

- NVIDIA. (2025, April 22). NVIDIA Blackwell Platform Boosts Water Efficiency by Over 300x. NVIDIA Blog. https://blogs.nvidia.com/blog/blackwell-platform-water-efficiency-liquid-cooling-data-centers-ai-factories/

- NVIDIA. (2025, June 24). Introducing NVFP4 for Efficient and Accurate Low-Precision Inference. NVIDIA Developer Blog. https://developer.nvidia.com/blog/introducing-nvfp4-for-efficient-and-accurate-low-precision-inference/

- Hilliard, T. (2024, September 3). The First AI Benchmarks Pitting AMD Against Nvidia. The Next Platform. https://www.nextplatform.com/2024/09/03/the-first-ai-benchmarks-pitting-amd-against-nvidia/

- Supermicro. (2025, August 11). Supermicro Expands Its NVIDIA Blackwell System Portfolio. https://www.supermicro.com/en/pressreleases/supermicro-expands-its-nvidia-blackwell-system-portfolio-new-direct-liquid-cooled-dlc

- Appinventiv. (2025). Cloud Migration Costs. https://appinventiv.com/blog/cloud-migration-costs/

- AIM Media House. (2025, September 25). Modular Raises $250M to Break Nvidia’s AI Chip Monopoly. https://aimmediahouse.com/ai-startups/modular-raises-250m-to-break-nvidias-ai-chip-monopoly

- nVent. (2025). 2025 Liquid Cooling Best Practices. https://www.nvent.com/en-us/data-solutions/2025-liquid-cooling-best-practices

- 3DInCites. (2024, October). Why Nvidia’s Blackwell is Having Issues with TSMC CoWoS-L. https://www.3dincites.com/2024/10/iftle-607-why-nvidias-blackwell-is-having-issues-with-tsmc-cowos-l-technology/

- SK hynix. (2025, September 12). SK hynix Completes World’s First HBM4 Development and Readies Mass Production. https://news.skhynix.com/sk-hynix-completes-worlds-first-hbm4-development-and-readies-mass-production/