* This blog post is an English translation of an article originally published in Japanese on April 10, 2025.

According to the press release, Llama 4 Scout fits on a single NVIDIA H100 GPU with INT4 quantization and supports up to 10 million input tokens. However, as described in our previous article, we could neither fit the model entirely on a single H100 with vLLM nor feed it 10 million tokens. In this article, we therefore check whether these claims can be reproduced with the officially provided scripts.

Environment Setup

We will set up the environment according to the official procedure. First, install the llama-stack library.

```bash
uv venv -p 3.11
. .venv/bin/activate
uv pip install llama-stack
```

Next, download the model. This time, instead of going through Hugging Face, we download it directly from https://llama.meta.com/llama-downloads/. After you enter the required information, a download link is sent to you by email.

The model is downloaded under ~/.llama, so make sure there is enough free disk space there, and use a symbolic link to a larger volume if necessary.
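If the home volume is small, one way to follow this advice is to move ~/.llama to a larger disk and leave a symbolic link in its place. The function below is a minimal sketch of that; the name `relocate_llama_dir` and its data-directory argument are hypothetical, and it assumes nothing else is writing to ~/.llama while it runs.

```bash
# Hypothetical helper: move ~/.llama to <data_dir>/llama and symlink it back.
relocate_llama_dir() {
  local data_dir=$1
  local src="$HOME/.llama"
  local target="$data_dir/llama"
  mkdir -p "$data_dir" || return 1
  if [ -d "$src" ] && [ ! -L "$src" ]; then
    # A real ~/.llama directory exists: move it, but never clobber an existing target.
    if [ -e "$target" ]; then
      echo "refusing to overwrite existing $target" >&2
      return 1
    fi
    mv "$src" "$target" || return 1
  fi
  mkdir -p "$target" || return 1
  ln -sfn "$target" "$src" || return 1   # ~/.llama -> <data_dir>/llama
  df -h "$data_dir"                      # show free space on the new volume
}
```

For example, running `relocate_llama_dir /mnt/data` (an example mount point) before `llama download` would place the checkpoints under /mnt/data/llama, while all commands in this article keep using ~/.llama paths.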

The download command is as follows:

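```bash
# Replace <download_link> with the URL from the email
llama download --source meta --model-id Llama-4-Scout-17B-16E-Instruct --meta-url <download_link>
```

Next, install the llama-models library, which provides the scripts for running the model.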

```bash
git clone git@github.com:meta-llama/llama-models.git
cd llama-models
# uv venv does not put pip into the environment by default, so install with uv pip as well
uv pip install ".[torch]"
```

To run the model at FP16 precision, execute the following script from the README.

```bash
#!/bin/bash
# Run from inside the llama-models checkout so that git rev-parse finds the repository root
NGPUS=4
CHECKPOINT_DIR=~/.llama/checkpoints/Llama-4-Scout-17B-16E-Instruct
PYTHONPATH=$(git rev-parse --show-toplevel) \
  torchrun --nproc_per_node=$NGPUS \
  -m models.llama4.scripts.chat_completion $CHECKPOINT_DIR \
  --world_size $NGPUS
```

FP8 quantization can be run in the same way with the following script from the README; it runs on two H100 GPUs.

```bash
MODE=fp8_mixed  # or int4_mixed
if [ "$MODE" = "fp8_mixed" ]; then
  NGPUS=2
else
  NGPUS=1
fi
CHECKPOINT_DIR=~/.llama/checkpoints/Llama-4-Scout-17B-16E-Instruct
PYTHONPATH=$(git rev-parse --show-toplevel) \
  torchrun --nproc_per_node=$NGPUS \
  -m models.llama4.scripts.chat_completion $CHECKPOINT_DIR \
  --world_size $NGPUS \
  --quantization-mode $MODE
```

However, for INT4 quantization, running the script above as-is (changing only the MODE variable) resulted in an error. We confirmed that it works after applying the patch from https://github.com/meta-llama/llama-models/pull/316.
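One way to apply that patch is to check out the pull request branch in the llama-models clone. GitHub exposes each pull request's head commit as refs/pull/<number>/head on the base repository, so it can be fetched without adding the contributor's fork as a remote. The helper below is a sketch: its name `checkout_pr` and the local branch name `pr-<number>` are our own choices, and the PR's contents may have changed or been merged since this article was written.

```bash
# Hypothetical helper: fetch a GitHub pull request into local branch pr-<number> and check it out.
checkout_pr() {
  local pr=$1
  local remote=${2:-origin}
  # refs/pull/<number>/head on the base repository points at the PR's latest commit
  git fetch "$remote" "pull/${pr}/head:pr-${pr}" || return 1
  git checkout "pr-${pr}"
}
```

Run `checkout_pr 316` inside llama-models, then rerun the INT4 command. Because the launch commands put the repository root on PYTHONPATH, the checked-out sources should be what torchrun imports; reinstalling with `uv pip install ".[torch]"` keeps the installed copy in sync as well.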

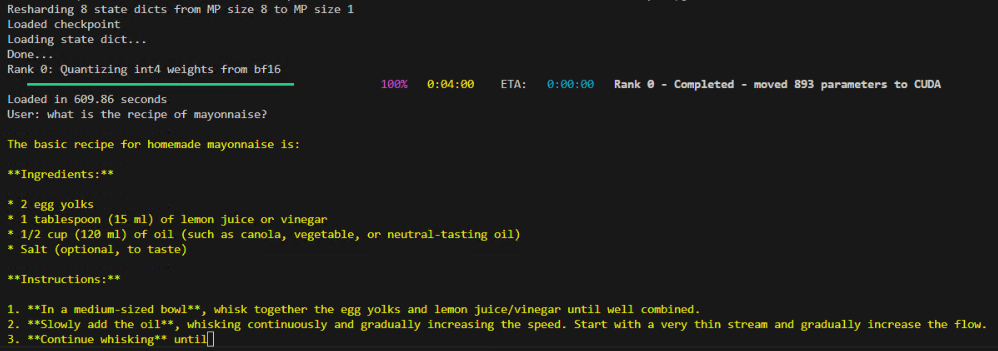

When it runs, you can see text being generated as shown below.

Verification

In the implementation, the default context length is 4096 tokens. We examine the behavior at longer context lengths by passing the --max-seq-len argument, as in the following script.

```bash
# Specify the GPU to use
export CUDA_VISIBLE_DEVICES=0
NGPUS=1

# Specify the context length
SEQ_LEN=10000

# When running several jobs on different GPUs of the same machine, give each torchrun its own port
MASTER_PORT=29004

# Run inference with INT4 quantization
MODE=int4_mixed
CHECKPOINT_DIR=~/.llama/checkpoints/Llama-4-Scout-17B-16E-Instruct
PYTHONPATH=$(git rev-parse --show-toplevel) \
  torchrun --nproc_per_node=$NGPUS --master-port $MASTER_PORT \
  -m models.llama4.scripts.chat_completion $CHECKPOINT_DIR \
  --world_size $NGPUS \
  --quantization-mode $MODE \
  --max-seq-len $SEQ_LEN
```

The following table shows the results of a binary search over the context length with 1, 2, 4, and 8 GPUs.

| Number of H100 GPUs | Maximum context length that worked (tokens) | Minimum context length that failed (tokens) |
|---|---|---|
| 1 | 35,000 | 40,000 |
| 2 | 400,000 | 600,000 |
| 4 | 1,000,000 | 1,200,000 |
| 8 | 2,500,000 | 3,000,000 |
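A search like this can be automated along the following lines. This is a sketch, not the script behind the table: it assumes that chat_completion exits with a non-zero status whenever a run fails (for example, with a CUDA out-of-memory error), and the function names `probe` and `bsearch_max`, the log file names, and the defaults (copied from the INT4 script) are our own.

```bash
# Hypothetical defaults mirroring the INT4 script; override them via the environment.
NGPUS=${NGPUS:-1}
MASTER_PORT=${MASTER_PORT:-29004}
MODE=${MODE:-int4_mixed}
CHECKPOINT_DIR=${CHECKPOINT_DIR:-$HOME/.llama/checkpoints/Llama-4-Scout-17B-16E-Instruct}

# probe LEN: exit 0 if chat_completion completes with --max-seq-len LEN.
# Output goes to probe_LEN.log so failed runs can be inspected afterwards.
probe() {
  PYTHONPATH=$(git rev-parse --show-toplevel) \
    torchrun --nproc_per_node="$NGPUS" --master-port "$MASTER_PORT" \
    -m models.llama4.scripts.chat_completion "$CHECKPOINT_DIR" \
    --world_size "$NGPUS" --quantization-mode "$MODE" \
    --max-seq-len "$1" > "probe_$1.log" 2>&1
}

# bsearch_max LO HI STEP: LO must be a length that works, HI one that fails.
# Halves [LO, HI] until HI - LO <= STEP, then prints "LO HI", i.e. the
# largest length that worked and the smallest length that failed.
bsearch_max() {
  local lo=$1 hi=$2 step=$3 mid
  while [ $((hi - lo)) -gt "$step" ]; do
    mid=$(( (lo + hi) / 2 ))
    if probe "$mid"; then
      lo=$mid
    else
      hi=$mid
    fi
  done
  echo "$lo $hi"
}
```

Sourced from inside the llama-models checkout, `bsearch_max 4096 1000000 5000` would bracket the single-GPU limit to within 5,000 tokens, starting from the 4,096-token default. Every probe reloads the checkpoint, so a coarse STEP keeps the number of runs small. Separate searches can run concurrently on one machine if each shell sets its own CUDA_VISIBLE_DEVICES, NGPUS, and MASTER_PORT.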

With one H100, the maximum context length was 35,000 tokens, and even with eight H100s it was 2.5 million tokens. Compared with FP8-quantized inference using vLLM, this setup used less memory and reached longer context lengths, but we could not reach the 10 million input tokens mentioned in the press release. Assuming that the achievable context length is proportional to memory capacity, we estimate that 32 or more H100s would be required to handle 10 million input tokens with this method.
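Written out, the proportional estimate simply scales the 8-GPU result linearly up to 10 million tokens. It is a back-of-the-envelope calculation under that assumption, not a measurement:

$$
N_{\text{H100}} \approx 8 \times \frac{10{,}000{,}000}{2{,}500{,}000} = 32
$$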

Summary

Following the official procedure, we confirmed the press-release claim that Llama 4 Scout “can be loaded onto a single H100 GPU” (with INT4 quantization). We could not verify the claim that it “can handle 10 million input tokens”, but we estimated the number of GPUs that would be required to do so.

Next, we plan to examine the implementation and profile it to identify options for efficient deployment.
